Technical Time Travel: On Vintage Programming Books

01-29-2022 | A look at three relics of a not-quite-bygone era. In picture-listicle form.

01-31-2022 update: Reddit discussion here. "The Mythical Man-Month" by Frederick Brooks (1975) was highly recommended!

03-20-2022 update: Hacker News discussion here. Interesting perspectives and vintage recommendations.

As technologists, we're constantly gulping from the bleeding-edge firehose: new versions, new standards, new frameworks, new paradigms. This is largely a good thing. Most advances offer a tangible improvement over the status quo. Specialization (e.g. recent Bachelor's degrees in AI) speeds the advance of promising fields. It's a future of exciting possibility, so long as our eager technology can be guided by effective policy.

What if we turn that lens backward, toward the yesteryear innovations of our shared past? Not in an effort to gain some competitive edge in the present - although the insight of historical context can be piercing - but simply to satisfy intellectual curiosity. To scratch that innocent itch for understanding how things work. Or, given hindsight, why they didn't.

To this end, I enjoy collecting rare and vintage programming books. It's not a hobby that leads to party invitations. But it is surprisingly cheap (the demand for used and outdated technical references is small). And mildly satisfying. There's just something quaint about pre-internet computer books, with their dead-tree print editions and crude typesetting. Each offering an alluring nostalgia, somehow palatable even if the era is before your own.

This post shares three of my favorites and summarizes the historical backdrop for each. All three have ties to modern-day tech, so I hope you'll find some aspect informative or interesting. Hit the gas until 88 MPH, we're going back Marty!

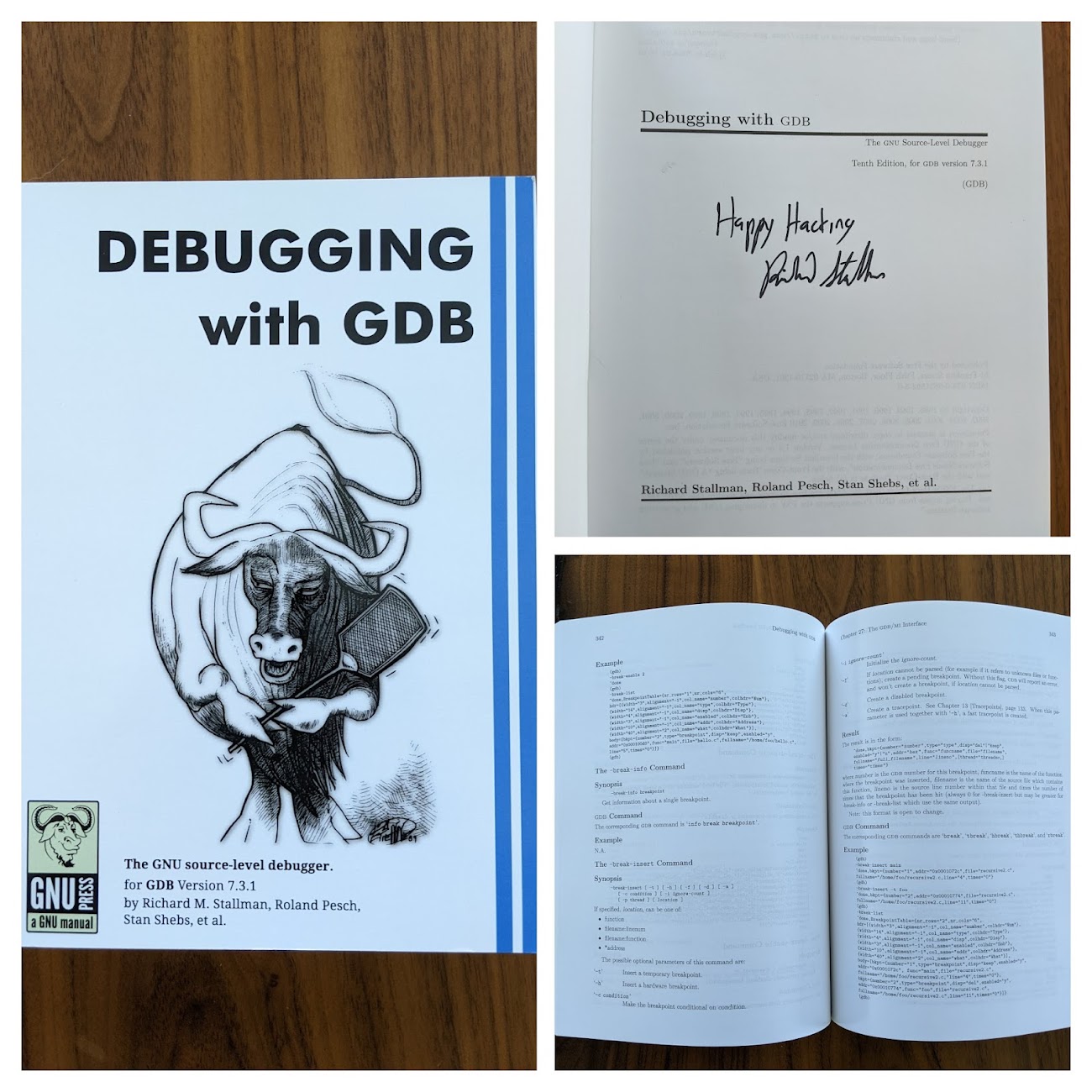

1) Debugging with GDB by Richard Stallman et al. (1988 - Present)

Having done academic research in dynamic program analysis, I have something of a soft spot for debuggers and emulators. The 1st edition of the long-running "Debugging with GDB" series was copyrighted in 1988. In fitting Free Software Foundation (FSF) fashion, the most current edition is freely available online. It's the comprehensive reference for gdb - a userland debugger supporting Unix-like systems.

gdb is a cornerstone of the incredibly influential GNU toolchain. This toolchain includes, among others, the gcc compiler, the glibc C standard library, and the make build system - software fundamental to both the rise of the Linux kernel and systems programming in general. Because GNU technologies are so ubiquitous on the server-side, almost all of your daily computer use is, in some direct or indirect way, enabled by GNU utilities. This handful of tools annually generates billions of dollars in value.

Dr. Richard Stallman, the original author of several GNU programs, personally signed this 2010 copy when I met him at a software conference in 2016. I distinctly remember people getting up and walking out halfway through his conference-closing keynote, which espoused the exploitative nature of cloud services. Categorically. Any and all usage of remote machines running code managed by another party. Though no doubt an icon of computing, Stallman could be described as an abrasive speaker.

While Stallman's grandiose vision for collective ownership of all software did not come to pass, it certainly left a mark. Today's open ecosystems are likely a result of the FSF shifting our Overton window. And we're all reaping the benefits. Daily.

Classic Problems, Modern Solutions

gdb/rr is one of the few late 80s developer tools still popular today. With forward and reverse engineers alike, no less. Perhaps it's unsurprising that several recent startups ship debugging and observability products. The Total Addressable Market (TAM) for a timeless need is sizable. Some of these startups include (listed alphabetically, non-exhaustive, and not an endorsement of any products): logrocket.com, metawork.com, replay.io, and tetrane.com.

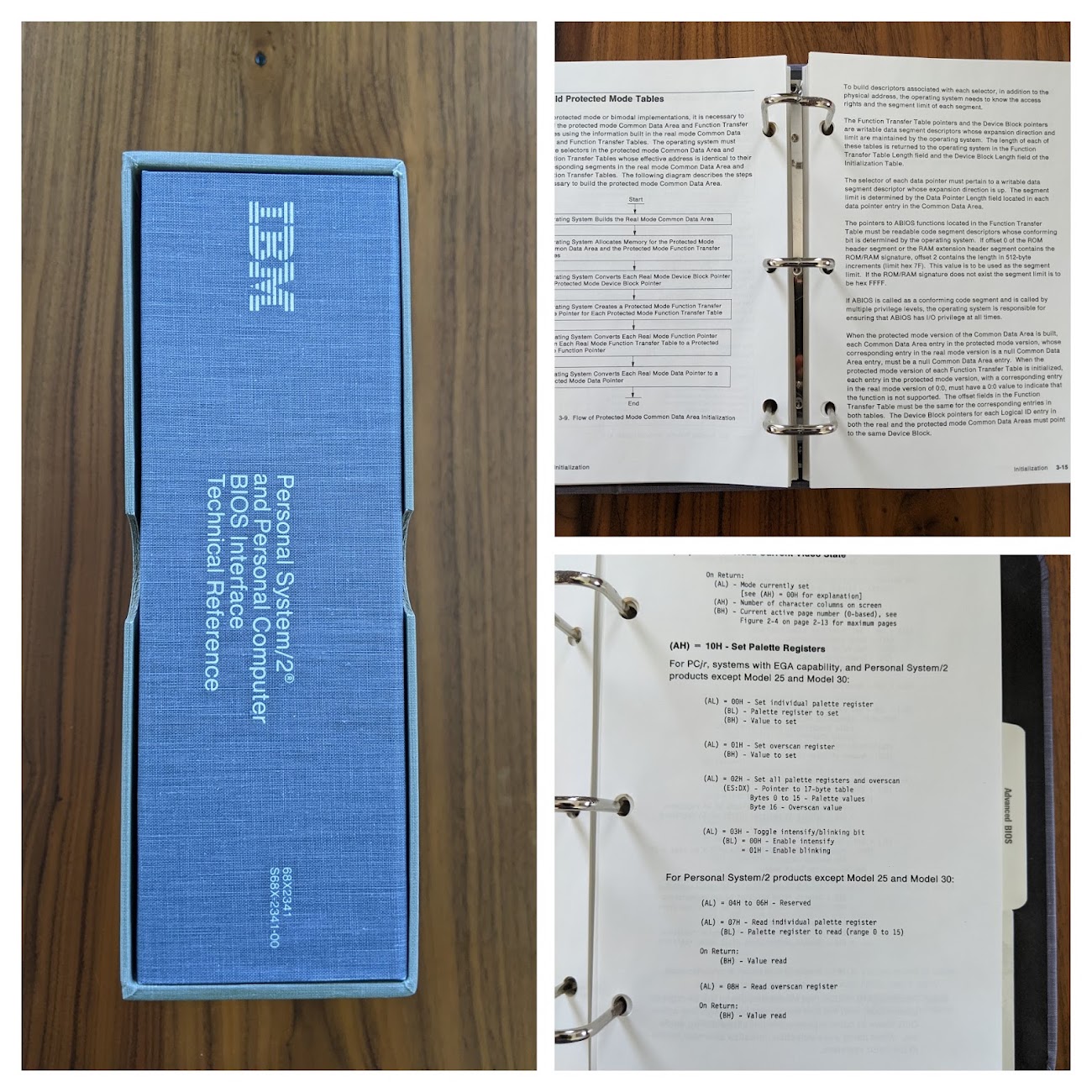

2) BIOS Interface Technical Reference by IBM (1987)

"Clean-room design" was an underhanded way to legally reverse engineer and clone a competitor's product. It works like this: engineer A produces a specification after studying the competing product, a lawyer signs off on the spec not including copyrighted material, and engineer B re-implements the product from the spec A created. A and B have the same employer, but since they're not the same person there's technically no copyright infringement. This technique was used during the fiercely-competitive market rush of early personal computing.

Perhaps its most infamous application was the recreation of IBM's Basic Input-Output System (BIOS). IBM's Personal Computer (PC) introduced home computing to an entire generation, ushering in a new era. It catalyzed mainstream adoption of general-purpose computers, which had largely been corporate tools and hobbyist curiosities. By reverse engineering its BIOS, a piece of low-level firmware, competitors could reduce time-to-market for software-compatible clones of IBM's flagship consumer product.

That's exactly what successful companies like Compaq Computer and Phoenix Technologies did. One team obtained IBM technical manuals, likely very similar to the book pictured above, and wrote specifications that described program behavior without including any of the book's code. Another team wrote a BIOS clone compliant with the specs, likely in assembly language, and then ran PC software atop the replica to check that it functioned as intended. Unethical? Probably. Effective? Definitely.

Dominance via Interoperability

Almost all modern laptops/desktops/servers are direct descendants of the 1981 IBM PC, and use an updated version of its x86 architecture. It's a legacy of enduring monoculture. So why did the PC dominate so convincingly?

Perhaps because it was a break from tradition for Big Blue, Sean Haas posits. Whereas the company's mainframe machines used a proprietary tech stack, the PC was open and modular, readily supporting 3rd-party hardware and software. Its design team prioritized both time-to-market and production capacity by intentionally mating mass-produced 8-bit peripherals to their 16-bit CPU (a variant of the venerable Intel 8086). Had different choices been made, maybe Apple's recent ARM SoC wouldn't be so notable an exception to the x86 rule.

BIOS may have been sunset in favor of UEFI, but the 8086 CPU refuses to die. To this day, your brand new 64-bit x86 PC or console will boot into "real-mode" on startup - ready to execute 16-bit instructions with a whopping 1MB of addressable memory. It's a strange bit of technical debt, an artifact of a 40-year commitment to backward compatibility. And likely to be around for 40 more.
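Where does that 1MB figure come from? Here's a minimal sketch of 8086-style real-mode address translation, in Python purely for illustration. The function and constant names are my own inventions, not taken from any IBM or Intel reference. It assumes the original 8086 behavior: a 16-bit segment shifted left by 4 bits, plus a 16-bit offset, truncated to 20 address lines.

```python
# Illustrative sketch of real-mode (segment:offset) addressing on an
# 8086-class CPU. Names here are hypothetical, chosen for readability.

ADDRESS_LINES = 20                  # the 8086 exposes 20 address lines
ADDRESS_SPACE = 1 << ADDRESS_LINES  # 2**20 bytes, i.e. the "whopping 1MB"


def real_mode_physical(segment: int, offset: int) -> int:
    """Translate a 16-bit segment and 16-bit offset to a 20-bit physical address."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must each fit in 16 bits")
    # The segment is multiplied by 16 (shifted left 4 bits), then the offset
    # is added. On the original 8086 the sum wraps around at 1MB.
    return ((segment << 4) + offset) % ADDRESS_SPACE


if __name__ == "__main__":
    # The original 8086 starts executing at FFFF:0000 after reset.
    print(hex(real_mode_physical(0xFFFF, 0x0000)))
    # Different segment:offset pairs can alias the same physical byte.
    print(real_mode_physical(0x0000, 0x7C00) == real_mode_physical(0x07C0, 0x0000))
```

Two 16-bit values can't directly name more than 64KB, so the shift-and-add trick is what stretches the reach to 1MB. The price is aliasing: many segment:offset pairs point at the same byte.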

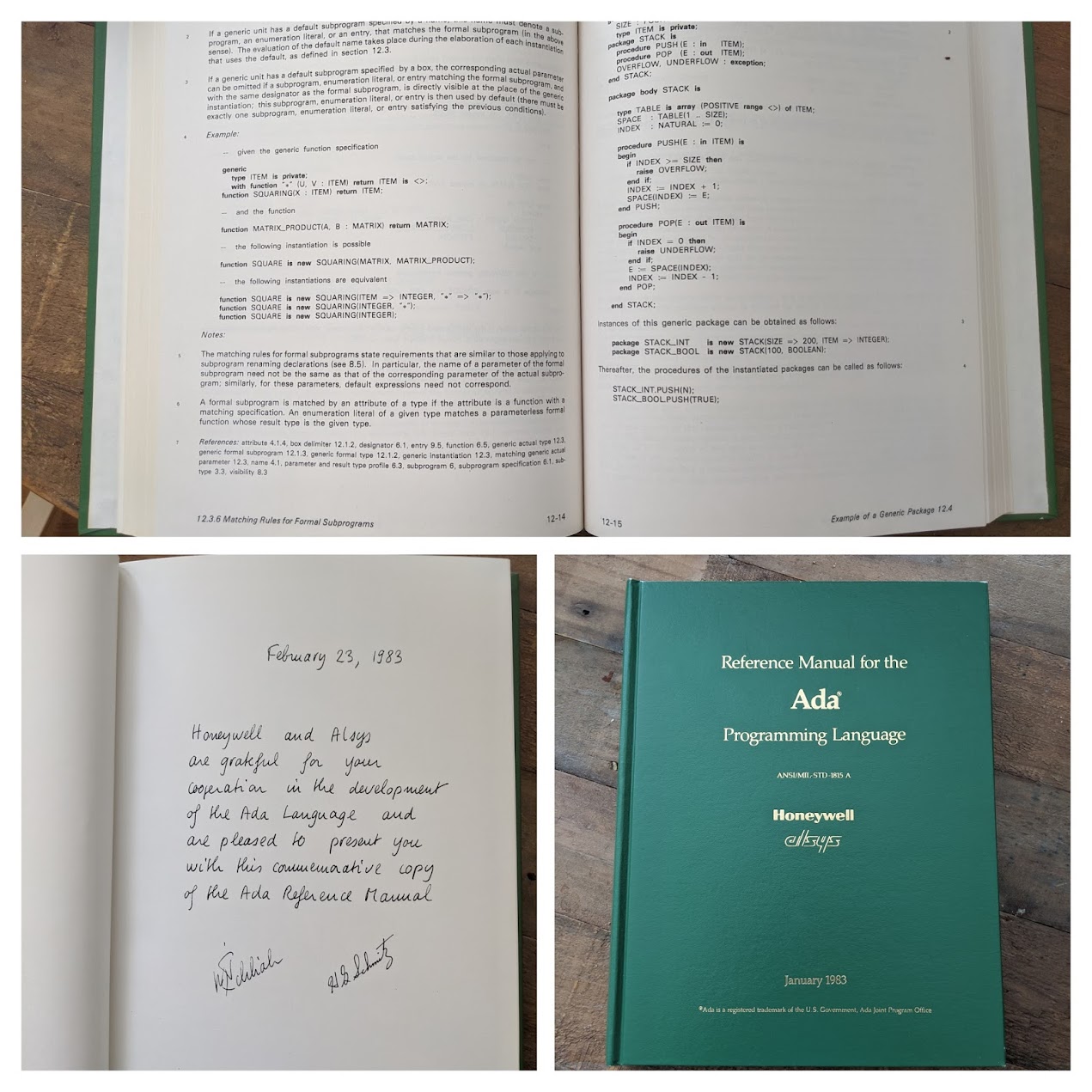

3) The Ada Reference Manual by Honeywell (1983)

In the late 70s, the US Department of Defense (DoD) had a critical problem: their embedded systems projects used over 450 esoteric programming languages, and maintenance was a multi-billion dollar burden. The government's answer? Standardization. Create a new language specially tailored to safety-critical embedded systems and legally mandate its use for applicable projects.

In 1978, the DoD put forth the "Steelman" requirements list and sponsored a competition (not unlike the more contemporary DARPA "Grand Challenges"). Four language design teams entered the fray: red, green, blue, and yellow. The green team won and produced the above language specification, hence the binding color. This one is a commemorative copy with a foreword thanking the original Honeywell development team.

Their language was named "Ada", after Ada Lovelace - a pioneering programmer who debugged her yet-to-be-built mechanical computer via mail correspondence. The spec's official document number, MIL-STD-1815-A, includes Lovelace's birth year, 1815.

On Modern Ada

Today's Ada boasts impressive features rarely seen in other programming languages. Including range types (encoding valid ranges of values at the type system level) and, for the SPARK subset, deductive verification (hand-written Hoare logic specifications, proven via SMT solving at compile-time).
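To give a flavor of range types, here's a rough Python approximation of an Ada declaration like `type Percent is range 0 .. 100;`. It's a sketch under heavy assumptions: `make_range_type` and `ConstraintError` are hypothetical names of my own (the latter loosely mirrors Ada's `Constraint_Error` exception), and Python can only check at runtime. An Ada compiler can reject some violations before the program ever runs, and SPARK can go further and prove their absence.

```python
# Runtime-only approximation of an Ada range type. Illustrative names;
# this is not how Ada implements the feature.

class ConstraintError(ValueError):
    """Raised when a value falls outside its declared range."""


def make_range_type(name: str, first: int, last: int):
    """Return a checker that accepts only integers in first..last inclusive."""
    def check(value: int) -> int:
        if not first <= value <= last:
            raise ConstraintError(f"{value} not in {name} range {first} .. {last}")
        return value
    check.__name__ = name
    return check


# Roughly analogous to: type Percent is range 0 .. 100;
Percent = make_range_type("Percent", 0, 100)

if __name__ == "__main__":
    print(Percent(42))   # within range, returned unchanged
    try:
        Percent(101)     # out of range, rejected
    except ConstraintError as err:
        print(err)
```

The point is that the valid range lives in the type's definition rather than in scattered `if` checks, so every use of the type gets the same guarantee.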

SPARK Ada has made recent advances in heap memory verification. Nvidia chose it over Rust for low-level firmware in 2019 - citing Rust's lack of certification for safety-critical systems as one consideration. But Ada hasn't quite taken off in the mainstream. Social factors - perhaps association with restrictive licenses and a proprietary compiler - have constrained adoption. Which is a shame: the language's feature set remains compelling.

Closing Thoughts

What we think of as digital antiquity is, within the timescale of human existence, too recent to truly warrant the label "history". There are Ada early-adopters still employed as developers today. The GNU General Public License (GPL) is still chosen for new projects. As a child of the 90s, I have fond memories of the American Megatrends BIOS clone splash screen - illuminated via the fuzzy glow of a CRT monitor.

The pace of technological progress is blistering. And its vector is unpredictable. Studying the past doesn't necessarily make it easier to foresee the future. It may, however, provide richer context for some of today's technologies. Or maybe just today's technical debt. Either way, our current state-of-the-art will soon be a retro curiosity relegated to a used book bargain bin. Hopefully for the better.

Read a free technical book! I'm fulfilling a lifelong dream and writing a book. It's about developing secure and robust systems software. Although a work-in-progress, the book is freely available online (no paywalls or obligations): https://highassurance.rs/